MPI Example Code

For a basic MPI program in C, the first part of the program includes the MPI header and declares a few variables. Each process can then find its own rank by calling `MPI_Comm_rank` on `MPI_COMM_WORLD`.

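The call to `compute` returns `force`, `potential`, and `kinetic`. The loop then writes `potential`, `kinetic`, and the relative energy error `(potential + kinetic - E0)/E0`, and calls `update(nparts, ndim, position, velocity, force, accel, mass, dt)`. After that, `enddo` closes the loop and `end` finishes the program.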

The application source code is in `mpi_sample.py`. `mpiexec` adds each of the processes it launches to an MPI communicator, which lets the processes send information to and receive information from one another via MPI. In this tutorial we will name our code file.

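The example `example1.jl`, which accompanies this paper, illustrates this. Change into the serial code with `cd code/calculate_pi/serial`. Running `ls` there lists `Makefile`, `calculate_pi.c`, `main.c`, and `proto.h`. Load the relevant Intel compiler modules and then build the code with `make`. In C MPI code, the call to `MPI_Comm_rank` gets the rank of the current process, and `MPI_Comm_size(MPI_COMM_WORLD, ...)` comes next.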

MPI can also be used from Fortran. The GitHub gist `mpi_sample.c` by Axper includes two headers and defines an error handler, `errhandler_function`, which takes an `MPI_Comm` communicator and an `int` error code and prints "ERROR HANDLED". Each process runs the code in `example1.py` independently of the others.

In C, `MPI_Comm_size` gets the total number of processes, and `MPI_Get_processor_name(processor_name, ...)` follows it. The tutorials assume that the reader has a basic knowledge of C, some C++, and Linux. In this lesson we expand further on collective communication routines by going over `MPI_Reduce` and `MPI_Allreduce`.

Note that if the program's running time is too short, you may increase the value of `FACTOR` in the source code file to make the execution time longer. Another lesson covers sending and receiving data with the send and recv commands in MPI. In pseudo-code, the pattern is: if I am processor A, call `MPI_Send(X)`; else if I am processor B, call `MPI_Recv(X)`.

The first thing to observe is that this is a C program: it begins with `#include` directives, defines `int main(int argc, char **argv)`, and ends with `return 0`.

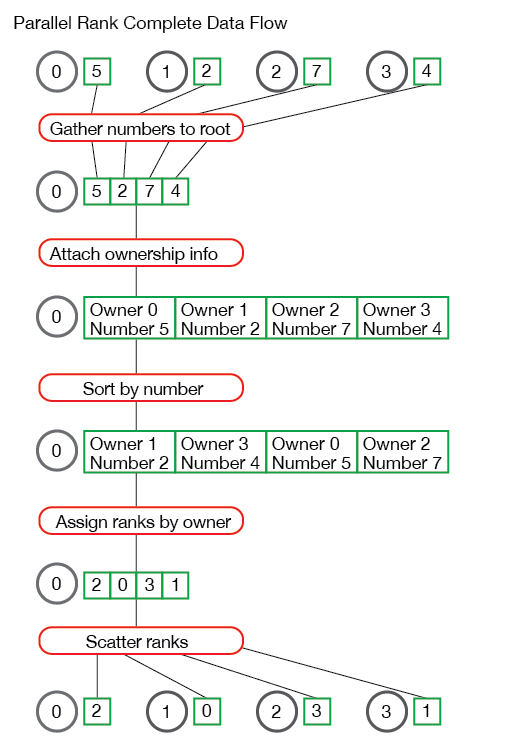

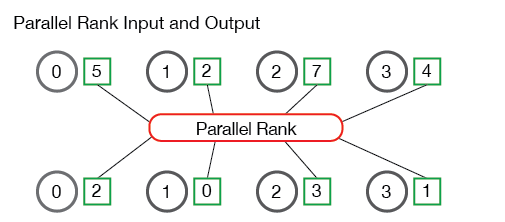

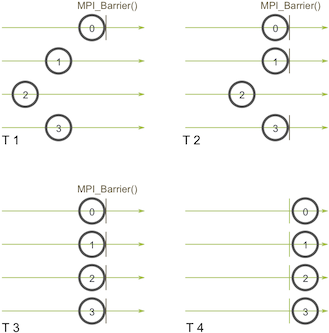

In the previous lesson, we went over an application example that uses `MPI_Scatter` and `MPI_Gather` to perform parallel rank computation with MPI. These tutorials cover a wide array of MPI concepts. The pseudo-code for each iteration's contribution to the partial sum of pi appears later on this page.

The code for this exercise is in the repository, in the `code/calculate_pi` subdirectory. That directory contains two subdirectories, and we'll be working in the `serial` one.

To install MPI on Ubuntu, step 1 is `sudo apt-get install python-numpy`. In step 2, once the above step has completed successfully, execute the following commands to update the system and install the pip package. Most new MPI users will probably want to know how to send and receive messages.

*Using MPI*, now in its 3rd edition, provides an introduction to using MPI, including examples of the parallel computing code needed for simulations of partial differential equations and n-body problems. *Using Advanced MPI* covers additional features of MPI. Below are the available lessons, each of which contains example code. Example 2, Basic Infrastructure: we will now do some work with the example in `examples/mpi/average`, which does some simple math.

These two books, published in 2014, show how to use MPI, the Message Passing Interface, to write parallel programs. Open `hello_world_mpi.cpp` and begin by including the C standard library and the MPI library, then construct the main function of the C code.

Copy the following line of code into your terminal to install NumPy, a package for scientific computing in Python. Another lesson covers dynamically sending messages to and from processors with MPI and mpi4py. A C program can likewise begin by checking the result of `MPI_Init` in an `if` statement.

Another lesson covers getting the network processor size with the size command. This package builds on the MPI specification and provides an object-oriented interface resembling … It might not be obvious yet, but the processes `mpiexec` launches aren't completely unaware of one another.

For example, it includes the standard C header files `stdio.h` and `string.h`. The example repository holds directories named `Bucket-sort`, `goldberg-conjecture`, `image-manip`, `integration`, `mandelbrot`, `matrix-multipy`, `metal-plate`, `moores-algorithm`, `nbody`, `newton-type-optimization`, and `prefix-sum`, plus a README stating: "These are just examples that I used for learning."

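This is the main time-stepping loop: `do i=1,nsteps`, whose body begins with `call compute(nparts, ndim, box, position, velocity, mass, ...)`. For 1 node and 1 process, the output is `pi1c.output` for C or `pi1f.output` for F90. In the C program, the `#include` lines are followed by `int main(int argc, char **argv)`, and no MPI call may come before `MPI_Init`.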

The TCS MPI Pi computing example is provided as `pi_mpi.c` for C, `pi_mpia.c` for C on Argo (data input in code), `pi_mpi_cpp.c` for C (untested), or `pi_mpi.f` for F90. The Julia MPI package is installed by executing `Pkg.update()` and then `Pkg.add("MPI")` from the Julia prompt. In this example, the value of `FACTOR` is changed from 512 to 1024.

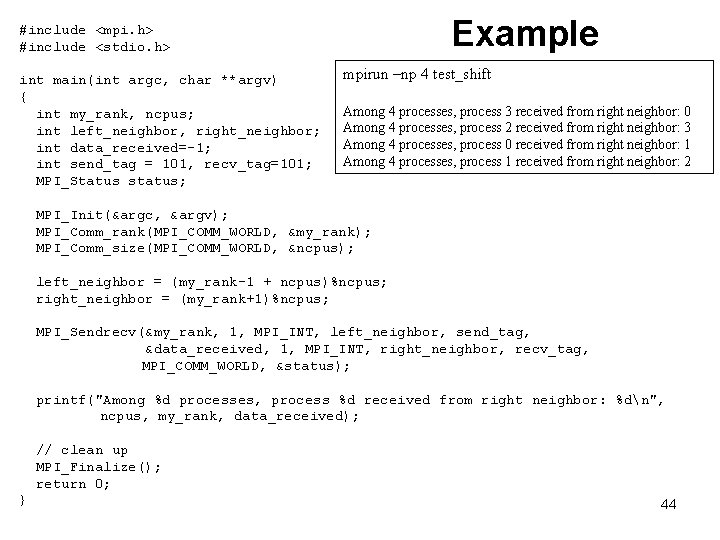

Here is a simple example of what a piece of the program would look like, in which the number X is presumed to have been computed by processor A and is needed by processor B. In this tutorial we will be using the Intel Fortran Compiler, GCC, Intel MPI, and Open MPI to create a …

It also has a `main` function, just like any other C program. The lessons cover the following topics:

- using `MPI_Pack` to share data
- sending in a ring (broadcast by ring)
- using topologies to find neighbors
- finding pi using MPI collective operations
- fairness in message passing, and implementing fairness using `MPI_Waitsome`
- a parallel data structure
- using nonblocking operations
- shifting data around
- exchanging data with `MPI_Sendrecv`
- a simple Jacobi iteration

If you simply wish to view MPI code examples without the site, browse the `tutorials/*/code` directories of the various tutorials.

Parallel programs enable users to fully utilize the multi-node structure of supercomputing clusters. The introduction and MPI installation lessons are "MPI tutorial introduction" and "Installing MPICH2 on a single machine", each also available in a Chinese version. Run the code now.

This document describes the MPI for Python package. MPI for Python provides Python bindings for the Message Passing Interface (MPI) standard, allowing Python applications to exploit multiple processors on workstations, clusters, and supercomputers. In the C greeting program, the message is formatted with `"Greetings from %s, rank %d out of %d"`, and `processor_name` and `world_rank` are among its arguments. Here's the code for `sct2.py`.

Note: all of the code for this site is on GitHub, and this tutorial's code is under `tutorials/mpi`. The next example uses conditional Python statements alongside MPI commands.

`MPI_Get_processor_name` gets the machine name. The program then tests `world_rank` against 0 and builds a message in `msg` with `sprintf`. The Message Passing Interface (MPI) is a standard that allows different nodes on a cluster to communicate with each other. For example, when initializing MPI you might do the following.

`this_bit_of_pi = this_bit_of_pi + 1.0 / (1.0 + ((i - 0.5) / N) * ((i - 0.5) / N))`

C Mpi Scatter Send Data To Processes Stack Overflow

Performing Parallel Rank With Mpi Mpi Tutorial

Writing Matlab Programs Using Mpi And Executing Them In Lomond

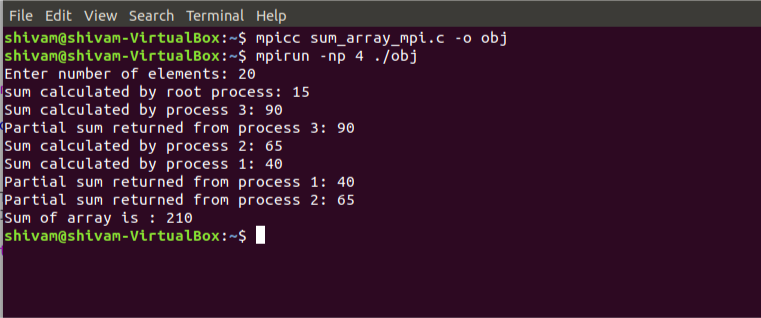

Sum Of An Array Using Mpi Geeksforgeeks

Fortran With Mpi 003 Hello World Program Youtube

Message Passing Interface An Overview Sciencedirect Topics

C Mpi Scatter Send Data To Processes Stack Overflow

An Example Of A Program Parallelized Using Mpi Download Scientific Diagram

Mpi Send Mpi Recev With C In Mpi Library Youtube

What Is Mpi Q Mpi Message Passing Interface

Performing Parallel Rank With Mpi Mpi Tutorial

Programmer S Journal Open Mpi On Ubuntu

C Mpi Scatter Send Data To Processes Stack Overflow

Mpi Broadcast And Collective Communication Mpi Tutorial

Sample Of Assembly Code For Mpi Program Download Scientific Diagram

Sample Event Loop In The Conceptual Generated C Code Specialized For Download Scientific Diagram
